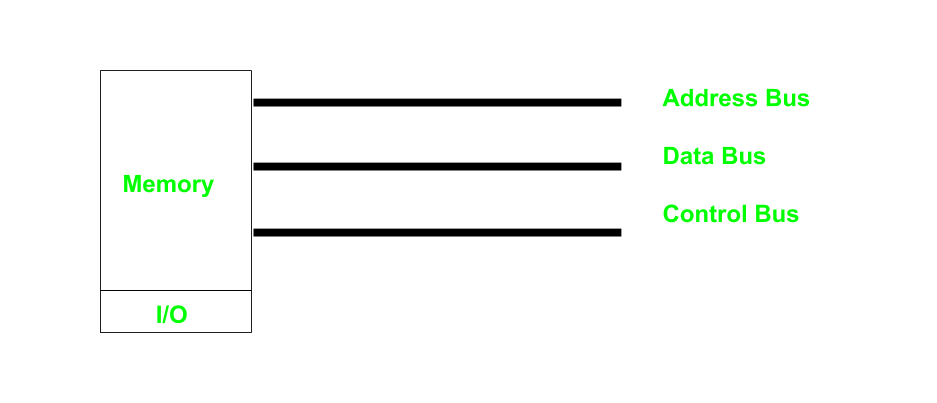

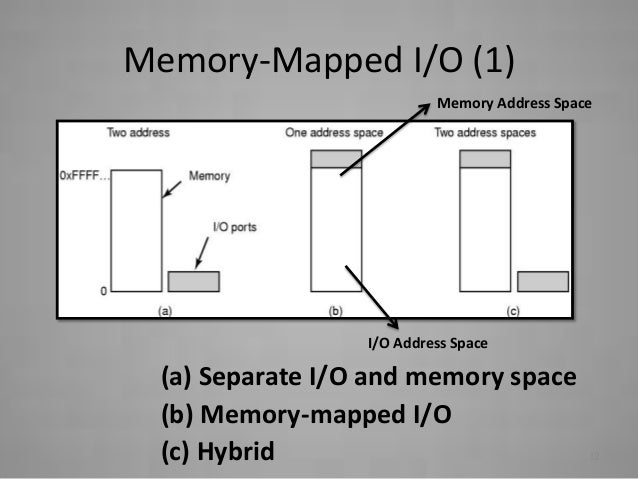

This tutorial will help you understand the memory map of peripherals and GPIOs, the so-called “Memory Mapped IO” concept. The registers associated with GPIOs or peripherals are assigned memory addresses that are mapped into your processor's address space, i.e. peripherals and memory share the same address space. “Memory Mapped IO” is a way to exchange data between the processor and those peripherals.

When you create a variable, your compiler assigns it a unique memory location in RAM. There are then two ways to access that variable: by its name, or by its address (through a pointer). A memory mapped register is accessed the second way, through a pointer to its fixed address.

Is memory mapped IO a strict necessity? No, it's not (but it's much nicer if you're willing to accept the performance/complexity consequences). On the face of it, putting slow devices on the same path as memory just sounds like an incredibly bad idea. However, it turns out that a lot of devices can benefit from being able to access the memory bus directly (using bus mastering/DMA to transfer data between the device and RAM, not for memory mapped IO), so you mostly end up wanting that slower bus anyway, and you mostly end up wanting to deal with all the other complications too. If you look at the history (at least for 80x86) you'll find a gradual shift from "simple, primarily using IO ports" to "extremely complex, primarily using memory mapped IO".
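As a rough illustration of the two access paths, here is a minimal C sketch. It first changes an ordinary variable by name and through a pointer, then sets a bit in a register through its address. The register is simulated with a plain variable so the code runs on any host; `fake_gpio_out`, `gpio_out_reg` and `set_pin` are made-up names, and on real hardware the address would come from the chip's datasheet.

```c
#include <stdint.h>

/* Stand-in for a peripheral register (an assumption for this sketch).
   On a real microcontroller this would be a fixed address from the
   datasheet; here it is an ordinary variable so the code runs anywhere. */
static volatile uint32_t fake_gpio_out = 0;

/* Hypothetical accessor: returns a pointer to the simulated register. */
volatile uint32_t *gpio_out_reg(void) {
    return &fake_gpio_out;
}

/* Change a variable by name, then through a pointer to it. */
int name_then_pointer(void) {
    int counter = 1;      /* access by name */
    int *p = &counter;    /* take the variable's address */
    *p = counter + 41;    /* access by address: counter is now 42 */
    return counter;
}

/* Set one output bit through the register's address (pin must be 0..31).
   volatile tells the compiler that every read and write must really happen. */
uint32_t set_pin(unsigned pin) {
    volatile uint32_t *reg = gpio_out_reg();
    *reg |= (uint32_t)1u << pin;
    return *reg;
}
```

Calling `set_pin(3)` and then `set_pin(0)` leaves bits 3 and 0 set in the simulated register, the same read-modify-write pattern a driver would use on a real output register.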

# MEMORY MAPPED IO SOFTWARE #

Communication between CPU/s and memory needs to be extremely fast because everything depends on it, and (for electronics) "fast" tends to mean simple, with short bus lengths (due to things like capacitance and resistance) and with the fewest loads (because each load increases the current needed to drive the bus, which means larger transistors to handle the higher current, which means more time taken for transistors to switch). Of course there's also a bunch of tricks to make it even faster, starting with caches (and maybe cache coherency), then prefetching, and speculative execution to keep the CPU busy while it's waiting for data.

By providing a dedicated mechanism (e.g. IO ports on 80x86) it all seems nice because you'd have separation between the high speed memory bus and all the (much slower) stuff.

What if we slap a honking great mess right in the middle of the most performance critical data path (between CPU and RAM), so that software (device drivers) can access devices' internal registers using memory mapped IO instead? Oh boy.

For a start you'd be looking at some major complications for caches (at a minimum, some way to say "these area/s should be cached, and those area/s shouldn't be cached", with all the logic to figure out which accesses are/aren't cached, and its added latency), plus more major complications for the CPU itself (can/should it try to prefetch? Can/should it speculatively execute past a read/write?), plus a slower (longer, with more loads) and/or more expensive bus to handle "who knows what device/s might get plugged in", likely with some extra pain (wait states, etc) to deal with devices being so much slower than memory.
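The "these areas are cached, those aren't" decision can be pictured as a lookup over address ranges. The C sketch below is only a software model of that idea: real CPUs make the decision in hardware, and the `memory_map` table, its addresses and the `is_cacheable` function are all invented for illustration.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* One entry in a hypothetical physical memory map. */
struct region {
    uint64_t base;
    uint64_t size;
    bool cacheable;   /* true for RAM, false for device registers */
};

/* Made-up layout, for illustration only. */
static const struct region memory_map[] = {
    { 0x00000000u, 0x40000000u, true  },  /* 1 GiB of RAM */
    { 0xFEC00000u, 0x00001000u, false },  /* 4 KiB device register window */
};

/* Returns true if an access to addr may be served from cache.
   Addresses outside every region are treated as uncacheable,
   which is the conservative choice. */
bool is_cacheable(uint64_t addr) {
    for (size_t i = 0; i < sizeof memory_map / sizeof memory_map[0]; i++) {
        const struct region *r = &memory_map[i];
        if (addr >= r->base && addr - r->base < r->size)
            return r->cacheable;
    }
    return false;
}
```

Even in this toy form, every access pays for a range check before it can proceed, which hints at the added logic and latency described above.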

Fundamentally, for a CPU to be able to perform IO it needs a way to read and write data to devices. Every device has some sort of internal registers that software (device drivers) uses to control the device's state, ask the device to do things, and determine status. There are 2 main ways that software can access those registers: by mapping them into the CPU's physical address space (the same way that memory is mapped into the CPU's physical address space), or by providing a dedicated mechanism (e.g. IO ports on 80x86, reached through dedicated instructions like IN/OUT). MMIO is the first approach, and it has proven popular for simplifying hardware designs.

To understand the advantages/disadvantages it's best to think about the nature of communication between CPU/s and memory. On a typical CPU the core(s) will all be connected to a common bus that allows the cores to read/write to main memory and perhaps shared caches. The bus, however, is agnostic as to the source, destination and contents of the data. Thus, transfers on the bus could go to different memory controllers, different cores, or caches. As a result the hardware designers can simply connect IO devices to this bus, and the devices end up being accessed via the same mechanisms as RAM. This has several advantages:

- No special instructions need to be supported by the CPU for IO.
- The CPUs can be performing useful work while data is being moved on the bus, instead of the cores waiting for each load/store to complete.
- CPU caching hardware can snoop transactions generated by IO to keep caches coherent.
- IO hardware can directly retrieve data from RAM or other IO devices without the cores being involved (bus mastering).

Most of these advantages are obscured from software, hence the argument that MMIO mainly benefits the hardware designers.
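To make the "same mechanism as RAM" point concrete, here is a small simulated bus in C. It is a sketch under stated assumptions, not a model of any real chip: the RAM size, the `UART_TX_ADDR` register address and the function names are all hypothetical. The bus routes purely by address, so a write to RAM and a write to a device register go through the same call.

```c
#include <stddef.h>
#include <stdint.h>

#define RAM_SIZE     256u      /* bytes of simulated RAM */
#define UART_TX_ADDR 0x1000u   /* hypothetical device register address */

static uint8_t ram[RAM_SIZE];
static char uart_log[64];      /* bytes the simulated device has "sent" */
static size_t uart_len;

/* The bus only looks at the address; it does not care what sits behind it. */
void bus_write8(uint32_t addr, uint8_t value) {
    if (addr < RAM_SIZE) {
        ram[addr] = value;                    /* plain storage */
    } else if (addr == UART_TX_ADDR) {
        if (uart_len < sizeof uart_log - 1)   /* side effect: byte is "sent" */
            uart_log[uart_len++] = (char)value;
    }
    /* writes to unmapped addresses are silently ignored in this model */
}

uint8_t bus_read8(uint32_t addr) {
    if (addr < RAM_SIZE)
        return ram[addr];
    return 0xFF;   /* this model returns all ones for anything else */
}

/* Returns the NUL-terminated text written to the device so far. */
const char *uart_sent(void) {
    return uart_log;
}
```

Writing `'h'` and then `'i'` to `UART_TX_ADDR` with `bus_write8` makes `uart_sent()` return `"hi"`, while a write to address `0x10` simply stores a byte. With port IO the device write would instead need a separate instruction such as OUT.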
